Please start any new threads on our new

site at https://forums.sqlteam.com. We've got lots of great SQL Server

experts to answer whatever question you can come up with.

| Author |

Topic |

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-10 : 11:17:18 Posted - 2015-04-10 : 11:17:18

|

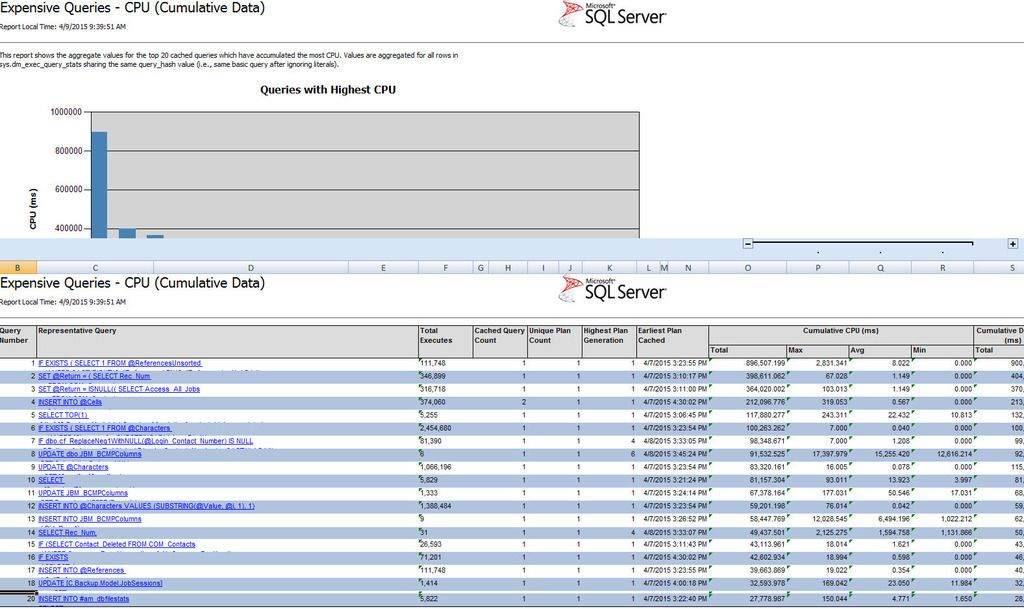

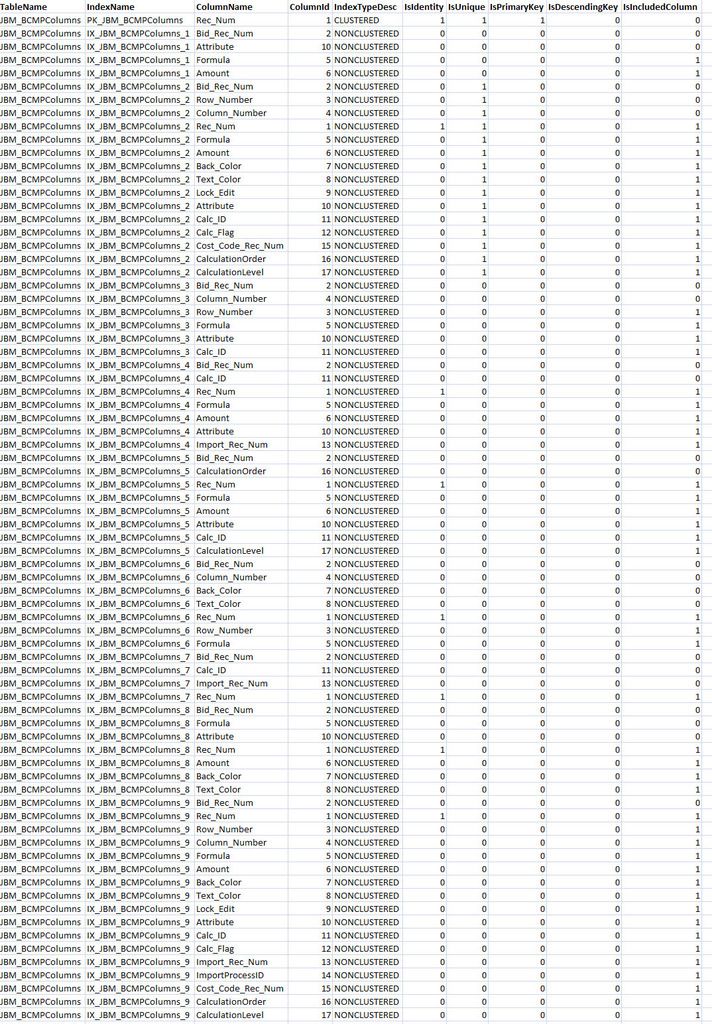

I'm new to the forum so please be patient.As an introduction, I would classify myself as a SQL Developer and not so much a SQL DBA, however, I am being asked to try and increase performance relating to a table in our database. I'm also fairly new to the company so I don't have all the answers as to why this table contains all of the indexes it contains, but I suspect that the number of indexes has a lot to do with this issue.Our client sent us the following report: And this is the list of indexes currently on what appears to be the offending table (JBM_BCMPColumns): And this is the list of indexes currently on what appears to be the offending table (JBM_BCMPColumns): As you can see, there are 10 indexes, most being non-clustered covering indexes. Based on the report and the fact that the lengthiest CPU hog is an UPDATE to the JBM_BCMPColumns table, it appears that this is the place to start.What would be next steps? Am I reading this report correctly?Thanks! As you can see, there are 10 indexes, most being non-clustered covering indexes. Based on the report and the fact that the lengthiest CPU hog is an UPDATE to the JBM_BCMPColumns table, it appears that this is the place to start.What would be next steps? Am I reading this report correctly?Thanks! |

|

|

MichaelJSQL

Constraint Violating Yak Guru

252 Posts |

Posted - 2015-04-10 : 11:46:13

Posted - 2015-04-10 : 11:46:13

|

| Find out if all the indexes are being used. if your server has been up for a while, then this should help. if it has been restarted - you will have to have enough up time and usage to determine how much each index is getting used. -- edited and added select Select object_name(dbis.object_id),i.name indexname,rCnts.index_id,isnull(dbis.last_user_seek,dbis.last_user_scan) usage,rCnts.cnt, dbis.* from sys.dm_db_index_usage_stats dbis inner join (SELECT database_id,object_id,Index_id,sum(user_seeks + User_scans + User_lookups) cnt from sys.dm_db_index_usage_stats group by database_id,object_id,Index_id ) rCnts on dbis.database_id = rCnts.database_id and dbis.object_id = rCnts.object_id and dbis.Index_id = rCnts.Index_id inner join sys.indexes i on i.index_id = dbis.index_idwhere object_name(dbis.object_id)= 'JBM_BCMPcolumns'order by 1,2 if you are not scanning , seeking against the index, you can probably lose it - but do allow enough up time for this. you want to make sure all monthly, weekly, daily uses of the table and indexes are taken into consideration . |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-10 : 11:52:06

Posted - 2015-04-10 : 11:52:06

|

Michael, is there more to the query you posted as it starts with "inner join".  |

|

|

|

MichaelJSQL

Constraint Violating Yak Guru

252 Posts |

Posted - 2015-04-10 : 11:53:34

Posted - 2015-04-10 : 11:53:34

|

| oopsSelect object_name(dbis.object_id),i.name indexname,rCnts.index_id,isnull(dbis.last_user_seek,dbis.last_user_scan) usage,rCnts.cnt, dbis.* from sys.dm_db_index_usage_stats dbis inner join (SELECT database_id,object_id,Index_id,sum(user_seeks + User_scans + User_lookups) cnt from sys.dm_db_index_usage_stats group by database_id,object_id,Index_id ) rCnts on dbis.database_id = rCnts.database_id and dbis.object_id = rCnts.object_id and dbis.Index_id = rCnts.Index_id inner join sys.indexes i on i.index_id = dbis.index_idwhere object_name(dbis.object_id)= 'JBM_BCMPcolumns'order by 1,2 |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-10 : 12:00:01

Posted - 2015-04-10 : 12:00:01

|

No problem.  As this issue is being reported by a client who uses our software and runs their own DB server, I'm assuming this query would be something I would ask them to run against their database and then send me the results? Anything else I should tell them? As this issue is being reported by a client who uses our software and runs their own DB server, I'm assuming this query would be something I would ask them to run against their database and then send me the results? Anything else I should tell them? |

|

|

|

MichaelJSQL

Constraint Violating Yak Guru

252 Posts |

Posted - 2015-04-10 : 12:03:42

Posted - 2015-04-10 : 12:03:42

|

| That is a start. find out what indexes are not being used at all and remove them. Looks at the indexes themselves to see if there commonality . you might have indexes that are very similar , but perhaps have slightly different includes. or composite indexes that could be made into includes |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-10 : 12:06:23

Posted - 2015-04-10 : 12:06:23

|

| Also, how should I read the output of this query? In other words, what are the key columns to concentrate on? I ran it against a local test database after hitting the table many times and note that I am seeing alot of data in the [indexname] column that isn't just an index relating to the JBM_BCMPColumns table.Thanks! |

|

|

|

MichaelJSQL

Constraint Violating Yak Guru

252 Posts |

Posted - 2015-04-10 : 12:17:53

Posted - 2015-04-10 : 12:17:53

|

| seeks, scans and lookups. if you have 0 all the time, then you probably are not using that index. -- but that can be verified by searching through the plans in the plan cache. again - get the up time on the environment first. If it has been less than a month, you may not have the complete picture. I.e. some indexes may be associated to procedures that run monthly . not to say you couldn't short yourself on a quarterly , but monthly or even weekly is a small enough scale to start. |

|

|

|

Kristen

Test

22859 Posts |

Posted - 2015-04-12 : 08:00:29

Posted - 2015-04-12 : 08:00:29

|

quote:

Originally posted by jkbeau1

Based on the report and the fact that the lengthiest CPU hog is an UPDATE to the JBM_BCMPColumns table, it appears that this is the place to start.

has the client found some toy and printed it off thinking its relevant?Unless I'm misreading the report and the item you referred to there are 6 instances of an UPDATE to the JBM_BCMPColumns table. Most of the queries are averaging a few MS.That's not to say that there aren't problems. but unless the client has told you of specific problems users are having e.g. "When I save a client its slow" then the fact that some queries, executing once in a while, take ages is probably irrelevant (although that query is always slow ... but if there is a huge ratio of UPDATEs to SELECTS it may well be a sensible design to favour the SELECTS. |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-13 : 08:38:12

Posted - 2015-04-13 : 08:38:12

|

| Hi Kristen,Thank you for your reply. I guess my question would be, "What would you suggest"? I'm a developer and not a DBA and our clients are responsible for their own hardware and software when it comes to SQL Server so I have no access to monitor their specific server remotely. I can only go on what my tech support department reports. Our tech support group probably has more experience on what to look for than I do when it comes to performance issues so when they start coming to me, well, that is actually a bit disconcerting. Also, having only been here about 4 months, I'm still learning the database structure (which is a mess by the way - I swear that 80% of our application is made up of stored procedures) and have no idea why there are so many indexes on this one table, let alone all that it is supposedly designed to do. I'm not looking for a specific answer, just some guidance as to how someone in my position, based on the expertise of someone who IS a DBA, would go about trying to identify the performance issue, if there is, in reality, even an issue. |

|

|

|

Kristen

Test

22859 Posts |

Posted - 2015-04-13 : 13:17:34

Posted - 2015-04-13 : 13:17:34

|

If the client doesn't have a specific performance issue then the fact that there are lots of indexes and/or some [infrequently used] queries appear to be slow, is neither here nor there IMHO.Yes there are lots of indexes. If you are selling an application to lots of different clients then maybe the indexes are there so that every client will get good performance from some query / reporting system, regardless of whether they use all the "User Defined" fields, or not, and regardless of whether they query by This Column or That Column.If, OTOH, you offer a fully-tailored fully-optimised service then I would expect to only see indexes needed by the specific client.The indexes appear to have names which are sequentially numbered. Suggests to me that they were mechanically generated, rather than being maintained as part of the application (you can call an index whatever you like, of course, by hand-built indexes are more likely to have names relevant to the columns they contain, or the jobs they do). So perhaps they were creating by some Optimisation Tool? (SQL does have such a thing). So that would raise the question as to whether one of your guys created them, or whether some "little knowledge is a dangerous thing" end user did - if it is the latter it's hardly your problem, eh??Sounds like you don't have direct access to their server? (Would be handy if you did, we wouldn't take on support of a client without direct access to their server). I would look at the creation (or maybe "modification") date of the indexes. Are they all on the same date? Was that a date when your guys were likely to have created them? (Same date as the table was created would suggest "yes") ... otherwise maybe the client created them. If so send the client the invoice!If the client DOES have performance problems I would start with a review of what is most likely causing the problem. For me that would NOT start with "What table has the most indexes" (although it might end up there!!). I would use SQL Profiler to see what the most expensive queries were, and also the most frequently used queries (a query which runs quickly, but is called 1-million times a day, off which you can shave a couple of milliseconds, is going to do more good than fixing a query called once a day that takes 3 minutes to run ...). Most poor-performance situations have a relatively-frequently-running query that performs either somewhat-badly or appallingly-badly  But poor performance might not be a SQL Query Optimisation issue at all. The server might be co-hosting SQL and IIS ... or something else ... and both might be arguing over who can have the most memory ... there's an unlimited pot of what the cause might be. But there are lots of articles on how to go about finding the bottleneck, and then fixing it. Experience helps of course, but its a handy project for a Newbie to get their teeth into - lots of stuff, and therefore knowledge, comes out of the woodwork in the process. Depends how long your Boss wants to let you tinker with this thing ... if s/he's not bothered I should spend as much time as you can - folk here will answer questions you ask, its more interesting than Students posting their homework expecting us to answer it for them!!"I swear that 80% of our application is made up of stored procedures"I don't have a problem with that But poor performance might not be a SQL Query Optimisation issue at all. The server might be co-hosting SQL and IIS ... or something else ... and both might be arguing over who can have the most memory ... there's an unlimited pot of what the cause might be. But there are lots of articles on how to go about finding the bottleneck, and then fixing it. Experience helps of course, but its a handy project for a Newbie to get their teeth into - lots of stuff, and therefore knowledge, comes out of the woodwork in the process. Depends how long your Boss wants to let you tinker with this thing ... if s/he's not bothered I should spend as much time as you can - folk here will answer questions you ask, its more interesting than Students posting their homework expecting us to answer it for them!!"I swear that 80% of our application is made up of stored procedures"I don't have a problem with that  at least 99% of our application is Stored Procedures rather than "Application Code". at least 99% of our application is Stored Procedures rather than "Application Code". |

|

|

|

Kristen

Test

22859 Posts |

Posted - 2015-04-13 : 13:19:50

Posted - 2015-04-13 : 13:19:50

|

| P.S. If you can check another implmentation of the application then does it, too, have all those indexes?Yes: Ask the DEVs whyNo: Perhaps the client created them ... |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-14 : 08:44:17

Posted - 2015-04-14 : 08:44:17

|

| Thanks Kristen.Yes, we deploy the database with our application so all clients have the same, more or less, depending on the version of our software they are using. Some clients are small and therefore performance never becomes an issue as they are using SQL Server Express...others are mid-size to large and are using the Enterprise version of SQL Server which requires they have some semblence of in-house knowledge as to how to install and configure SQL Server. That is where our tech support team comes in and they can help them quite a bit.I feel I am at a disadvantage as all of the information I get is second hand through our tech support team and I have no way of contacting the client directly. I'm just asked to "fix it". Well, if I can't monitor it and only get snippets of what is happening, it is hard to "fix it".Luckily I do not have a boss hovering over my shoulder asking "Is it fixed yet?" And it doesn't appear at this time to be a major issue.I've sent an e-mail to my other developers to see if they have any insight as to the indexes on this table.Would obtaining a copy of the client's DB and looking at it here be of any help do you think? I know I wouldn't be looking at it with their SQL Server environment, but it may help, no? |

|

|

|

Kristen

Test

22859 Posts |

Posted - 2015-04-14 : 20:35:00

Posted - 2015-04-14 : 20:35:00

|

quote:

Originally posted by jkbeau1

Would obtaining a copy of the client's DB and looking at it here be of any help do you think? I know I wouldn't be looking at it with their SQL Server environment, but it may help, no?

Yup, that would do. Its not the same as looking at their machine direct, as you have already surmised. But the only difference is if they have some other, hardware related, problem - such as less memory than your server or (most likely!) lots of concurrent users, which might bring to light a more specific performance problem.So:Restore a copy of their DB onto your server.Experiment with some queries. In terms of looking at Query Plans and Logical reads (and assuming same SQL version on Client's and Your server) then I would expect them to be identical.You would certainly be able to look at the Create date of the indexes and some other investigations like that. |

|

|

|

jackv

Master Smack Fu Yak Hacker

2179 Posts |

Posted - 2015-04-15 : 01:48:19

Posted - 2015-04-15 : 01:48:19

|

| @jkbeau - is the database being maintained properly? i.e statistics and index maintenance? An aspect of performance tuning is asking the right question? is it a performance , efficiency or capacity issue?http://www.sqlserver-dba.com/2013/02/sql-performance-tuning-asking-the-right-question.htmlHave you checked if other tasks are running? How long should it take?Just by looking at top queries by CPU , won't necessarily give you the insight\method to fix the problemJack Vamvas--------------------http://www.sqlserver-dba.com |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-15 : 08:03:57

Posted - 2015-04-15 : 08:03:57

|

| Thanks Jack and Kristen.Jack, good questions. Our tech support is the primary point of contact but I can certainly send those questions to them to pass along. I don't know.Tech support told me that as far as their hardware is conncerned they verified the performance issue is not being caused by inadequate hardware on their SQL server. Plenty of resources available. They have two instances of SQL running so our tech support person allocated 17GB to the instance related to our application, 4 GB for the SharePoint instance, and 3GB for the OS. No change in performance after this change. They also looked for other applications that could be interfering with SQL and found none.Awesome link by the way...thank you! |

|

|

|

Kristen

Test

22859 Posts |

Posted - 2015-04-17 : 06:58:43

Posted - 2015-04-17 : 06:58:43

|

| So what is the performance issue as described by the client? (I don't need to know the exact details, which are probably confidential, just something like "When they Add record-A / run Report-B / Search for Customer-C" it is slow (where "slow" = "X=seconds" and client would like that to be no more than "Y-seconds")I don't think (unless I missed it??) we've had that, as I think?? I've only seen "There appear to be too many indexes" and "One very rarely used insert runs very very slowly" {and as already said I don't think either of those are real-world issues, just pedantic ones!] |

|

|

|

jkbeau1

Starting Member

12 Posts |

Posted - 2015-04-17 : 08:35:15

Posted - 2015-04-17 : 08:35:15

|

| Basically performance when opening certain tabs on a page. We have a page called "Bid Recap" that contains multiple tabs that contain grids that mimic Excel. So there are a lot of rows and cells. We have tables that support all of this right down to the attributes for each "cell". When they open the "Bid Recap" page for the first time, it takes 30 seconds to 3 minutes, depending on the client, to open and populate the tabs. I'm pretty certain this is due to the amount of indexes on the table I identified earlier in this post as based on the report, it is slowest when perform INSERTs and UPDATEs on this table. But it seems as though everyone is saying that the DB needs to be monitored over time. I have sent them some index queries to run and they all report that the indexes on the table in question are being used effectively. So I'm not sure what to do at this point. |

|

|

|

Kristen

Test

22859 Posts |

Posted - 2015-04-17 : 09:47:12

Posted - 2015-04-17 : 09:47:12

|

That explanation is very helpful thanks.My info below is Very Broad Brush. I am guessing your level of SQL knowledge, the fact that you appear to be smart  and that the following will get you a decent Step Up the ladder. There is a lot more to it than this, and the things that I say don't hold true in all circumstance, but hopefully it will give you a "nose" for what is worth investigating / spending your time on. and that the following will get you a decent Step Up the ladder. There is a lot more to it than this, and the things that I say don't hold true in all circumstance, but hopefully it will give you a "nose" for what is worth investigating / spending your time on.quote:

Originally posted by jkbeau1

Basically performance when opening certain tabs on a page. We have a page called "Bid Recap" that contains multiple tabs that contain grids that mimic Excel. So there are a lot of rows and cells. We have tables that support all of this right down to the attributes for each "cell". When they open the "Bid Recap" page for the first time, it takes 30 seconds to 3 minutes, depending on the client, to open and populate the tabs

I'm making a grand assumption that there is no UPDATE or INSERT in just "opening a TAB", but rather loads of SELECTs.Can't see that the number of indexes matters at this point (although I explain below one scenario where it would). SQL wants to select by the most efficient means possible. If there is a really suitable index then it will use that ...However, when you FIRST pose the query SQL will scratch its beard and say "I wonder what would be the best way of retrieving the data to satisfy this query". Having decided "How To" it will then cache that Query Plan and the next time it gets IDENTICAL SQL it will match it to that query plan and not waste time trying to figure out a new Query Plan.If you ask SELECT Col1, Col2 FROM MyTable WHERE MyDate = '17Apr2015'then that is NOT the same as asking for "18Apr2015" (SQL is smart enough to detect some differences as being "the same", but "it depends" ...)If you instead sayDECLARE @MyDate DATESET @MyDate = '17Apr2015' -- (Most likely this would actually be a parameter to a Stored ProcedureSELECT Col1, Col2 FROM MyTable WHERE MyDate = @MyDateThen even for "18Apr2015" the actual SQL SELECT statement will be IDENTICAL.If your guys are using dynamic SQL where they construct the whole syntax of the SQL and THEN pass it to SQL Server it is entirely possible that they are presenting a "different" query EVERY time and SQL has to make a BRAND NEW query plan EVERY TIME.OK, so how does SQL make a Query Plan?it will check to see if an index is likely to help. so how does SQL answer the question "Will Index #1 help?"First up SQL will be interested if Index #1 includes the MyDate column. Then SQL will say "The likelihood is that if I use Index #1 I will get 10 records for that date". How does SQL know (as in "guess" !!) this? it keeps "statistics" for each index that broadly-speaking indicate the distribution of data in the index, so SQL can "predict" that there will be 10 matching rows.If an index is UNIQUE on MyDate then that is going to score highly  If Index #1 doesn't include MyDate at all then SQL will have to check every row in the index, and use that to load each row in the database to then check for MyDate. Clearly it is not going to do that ... but when your query includes a WHERE clause on 10 columns, and JOINs to 5 other tables, there are all sorts of possible combinations - and SQL will assign a "query cost" to using each index. The "cheapest query cost" will then win, and that will be the Query Plan that gets used (and cached)Now then ... you've probably already spotted this!: you have a LOT of indexes If Index #1 doesn't include MyDate at all then SQL will have to check every row in the index, and use that to load each row in the database to then check for MyDate. Clearly it is not going to do that ... but when your query includes a WHERE clause on 10 columns, and JOINs to 5 other tables, there are all sorts of possible combinations - and SQL will assign a "query cost" to using each index. The "cheapest query cost" will then win, and that will be the Query Plan that gets used (and cached)Now then ... you've probably already spotted this!: you have a LOT of indexes  . So SQL is not just going to say "Is Index #1" any good, its going to thumb though all the way to "Is index #100 any good". That MAY VERY WELL take longer than actually running the query itself!!! That said, that does NOT matter provided that SQL caches the Query Plan AND you use the Exact Same (or near enough that SQL is happy) query again .. and Again ... AND AGAIN AND AGAIN AND AGAIN . So SQL is not just going to say "Is Index #1" any good, its going to thumb though all the way to "Is index #100 any good". That MAY VERY WELL take longer than actually running the query itself!!! That said, that does NOT matter provided that SQL caches the Query Plan AND you use the Exact Same (or near enough that SQL is happy) query again .. and Again ... AND AGAIN AND AGAIN AND AGAIN  (Of course IF the issue is an INSERT then the cost will ALWAYS be high because SQL will have to insert a new value in EVERY index for EACH record Inserted - fewer indexes WILL be quicker, in the case of Inserts. It is far more common that systems READ for 100's, 1,000's etc. time more than the number of inserts. In an ideal insert-only database you would have NO secondary indexes to boost Insert-speed)If your DEVs are generating Dynamic SQL which is different every time then the chances are that SQL NEVER finds a query plan that already exists that matches the query (short of a user just pressing REFRESH !)If that turns out to be the case then best that your DEVs change to either using Stored Procedures OR using Parametrised SQL statement - so they still construct the query dynamically but they NEVER put a fixed value in the query, but instead the put a @ParameterVarible in.So you might wind up with User A asking:SELECT Col1, Col2 FROM MyTable WHERE MyDate = @SomeDateand user B asking:SELECT Col1, Col2 FROM MyTable WHERE MyDate = @SomeDate AND MyTotalAmount > @SomeAmountBUT you have NOT got an infinite number of (slightly) different queries, what you have got is some queries which are "popular" and get asked over-and-over. You will also have some that are only ever asked once - they will still be slow. I doubt clicking on a TAB poses a unique SQL question, I think more like that it would post a "standard" or failing that "popular" query - but on the assumption that the query is ParametrisedI have been paid good consultancy money to walk into a new client with a performance problem, explain this to their developers, luckily they have centralised all their database-query code in their APP so the changes they had to make only took a couple of hours, and then they have (I kid you not) a 100x performance improvement.You may have spotted that there is another possible issue here. The Query Plan is only as good as the Statistics on the Index. If they were last rebuild a year ago they are probably no use whatsoever! SQL has some "rules" that cause it to say "Chuck the statistics away and make some new ones". e.g. "After every 1,000 inserts make new statistics". You can also force statistics to be rebuilt, e.g. as a scheduled task - so you can CHOOSE to do that in the middle of the night, rather than at the busiest time of the day just as someone enters the 1,000th transaction!!There is also a setting to force statistics to be recalculated Asynchronously. The default is Synchronous which basically causes SQL to say "I have decided to recalculate statistics for MyTable ALL queries to that table will now HOLD until I finish my rebuild". That causes an immediate slowdown for all uses effected. It might only be for a second, but it is a cause of people saying that the system sometimes runs slowly.Asynchronous means that SQL says "Oops! I now need to recalculation statistics on MyTable, sorry everyone may get terrible performance until I have done this, but I'll let your queries through anyway". If you queried the table one second earlier your query would have run in X-seconds, its not going to run appreciably slower now (but may run A BIT or even MUCH faster after statistics are refreshed) so why not just let your query run anyway, rather than HOLD it. (Setting Async stats refresh is a simple database-level setting)You said that you might be able to get a copy of the client's database (if not, from the sound of it, various clients have various different performance speeds, probably depending on volume of data, so the data for "any client" may well be good enough to start with). What that will let you do is "capture" the actual Query Plan and analyse it. There are tools to do this, they are NOT for the faint-hearted, but equally they are the hub of performance tuning, so it is great knowledge and skill gained.Basically you can SPY on SQL (using SQL Performance Monitor). You will see every SQL statement sent to the server (in practice you filter it to just a single workstation / database / whatever). So you perform the "slow operation" in your APP clicking on the relevant TAB in your application and at the same time Performance Monitor will capture all the SQL statement(s). You can, of course, at that point see whether the SQL is "unique" or "parametrised" (Of course IF the issue is an INSERT then the cost will ALWAYS be high because SQL will have to insert a new value in EVERY index for EACH record Inserted - fewer indexes WILL be quicker, in the case of Inserts. It is far more common that systems READ for 100's, 1,000's etc. time more than the number of inserts. In an ideal insert-only database you would have NO secondary indexes to boost Insert-speed)If your DEVs are generating Dynamic SQL which is different every time then the chances are that SQL NEVER finds a query plan that already exists that matches the query (short of a user just pressing REFRESH !)If that turns out to be the case then best that your DEVs change to either using Stored Procedures OR using Parametrised SQL statement - so they still construct the query dynamically but they NEVER put a fixed value in the query, but instead the put a @ParameterVarible in.So you might wind up with User A asking:SELECT Col1, Col2 FROM MyTable WHERE MyDate = @SomeDateand user B asking:SELECT Col1, Col2 FROM MyTable WHERE MyDate = @SomeDate AND MyTotalAmount > @SomeAmountBUT you have NOT got an infinite number of (slightly) different queries, what you have got is some queries which are "popular" and get asked over-and-over. You will also have some that are only ever asked once - they will still be slow. I doubt clicking on a TAB poses a unique SQL question, I think more like that it would post a "standard" or failing that "popular" query - but on the assumption that the query is ParametrisedI have been paid good consultancy money to walk into a new client with a performance problem, explain this to their developers, luckily they have centralised all their database-query code in their APP so the changes they had to make only took a couple of hours, and then they have (I kid you not) a 100x performance improvement.You may have spotted that there is another possible issue here. The Query Plan is only as good as the Statistics on the Index. If they were last rebuild a year ago they are probably no use whatsoever! SQL has some "rules" that cause it to say "Chuck the statistics away and make some new ones". e.g. "After every 1,000 inserts make new statistics". You can also force statistics to be rebuilt, e.g. as a scheduled task - so you can CHOOSE to do that in the middle of the night, rather than at the busiest time of the day just as someone enters the 1,000th transaction!!There is also a setting to force statistics to be recalculated Asynchronously. The default is Synchronous which basically causes SQL to say "I have decided to recalculate statistics for MyTable ALL queries to that table will now HOLD until I finish my rebuild". That causes an immediate slowdown for all uses effected. It might only be for a second, but it is a cause of people saying that the system sometimes runs slowly.Asynchronous means that SQL says "Oops! I now need to recalculation statistics on MyTable, sorry everyone may get terrible performance until I have done this, but I'll let your queries through anyway". If you queried the table one second earlier your query would have run in X-seconds, its not going to run appreciably slower now (but may run A BIT or even MUCH faster after statistics are refreshed) so why not just let your query run anyway, rather than HOLD it. (Setting Async stats refresh is a simple database-level setting)You said that you might be able to get a copy of the client's database (if not, from the sound of it, various clients have various different performance speeds, probably depending on volume of data, so the data for "any client" may well be good enough to start with). What that will let you do is "capture" the actual Query Plan and analyse it. There are tools to do this, they are NOT for the faint-hearted, but equally they are the hub of performance tuning, so it is great knowledge and skill gained.Basically you can SPY on SQL (using SQL Performance Monitor). You will see every SQL statement sent to the server (in practice you filter it to just a single workstation / database / whatever). So you perform the "slow operation" in your APP clicking on the relevant TAB in your application and at the same time Performance Monitor will capture all the SQL statement(s). You can, of course, at that point see whether the SQL is "unique" or "parametrised"  Let's assume it is parametrised, and you think it is "pretty optimal". You can get SQL to show you the query plan for that statement. This will show you which index it is using. You might spot that it is NOT using an index that you would expect it to, or (surprisingly given your 1,000's of indexes Let's assume it is parametrised, and you think it is "pretty optimal". You can get SQL to show you the query plan for that statement. This will show you which index it is using. You might spot that it is NOT using an index that you would expect it to, or (surprisingly given your 1,000's of indexes  ) there is no suitable index available. So you can create (on your DEV/TEST database) an index that you think might help. Re-run the query and see if it is faster. If not DROP the new index.You could also try dropping ALL the 1,0000's of indexes, except the one(s) that the Query Plan is actually using, and see how much faster that is (as SQL will not have to check all the idnexes when it builds the Query Plan)You could try (manually) rebuilding Statistics (using a Full Scan as well as the default Part-Scan) and see if that helps.You can hand edit the SQL in your favourite SQL Querying Tool - if your DEVs do generate Dynamic "every-one-is-different" SQL you could hand-edit it into some Parametrised SQL and then try running that. If that makes a big difference you could then speak to the DEVs and say "try this" (or perhaps you are more likely to say "Would it be possible to change the APP to generate SQL in THIS way" ) there is no suitable index available. So you can create (on your DEV/TEST database) an index that you think might help. Re-run the query and see if it is faster. If not DROP the new index.You could also try dropping ALL the 1,0000's of indexes, except the one(s) that the Query Plan is actually using, and see how much faster that is (as SQL will not have to check all the idnexes when it builds the Query Plan)You could try (manually) rebuilding Statistics (using a Full Scan as well as the default Part-Scan) and see if that helps.You can hand edit the SQL in your favourite SQL Querying Tool - if your DEVs do generate Dynamic "every-one-is-different" SQL you could hand-edit it into some Parametrised SQL and then try running that. If that makes a big difference you could then speak to the DEVs and say "try this" (or perhaps you are more likely to say "Would it be possible to change the APP to generate SQL in THIS way"  )Point of detail: it is not, usually, fruitful to time a query to measure its actual elapsed-time. First time you run the query none of the data from the table will be in memory, second time probably ALL of the data will be in memory. (You can ask SQL to "empty memory" but on a shared server that is not popular!). What you can ask SQL to do is tell you the "Logical reads" (how many disk read [and also Writes, if Insert/Update involved] operations it predicts it will make [using its statistics]) and also the number of SCANS. A SCAN is where SQL has no direct index to use, so it scans the whole index (or the whole table) to try to find records that match the criteria. If you join a PARENT and a CHILD table and you got, say, 100 scans that would be a disastrous situation - SQL was rescanning the child table for every single row in the MASTER table. An index on the child table (on the relevant columns in the JOIN) would reduce the scan count to ONE!)So my recommendation is to get to the point where you can "spy" on SQL going to the server and then experiment with individual SQL statements to look at the Scan Count and Logical Reads and see what makes them better (adding an index for example, or parametrising the query - or asking for suggestions on SQL Team )Point of detail: it is not, usually, fruitful to time a query to measure its actual elapsed-time. First time you run the query none of the data from the table will be in memory, second time probably ALL of the data will be in memory. (You can ask SQL to "empty memory" but on a shared server that is not popular!). What you can ask SQL to do is tell you the "Logical reads" (how many disk read [and also Writes, if Insert/Update involved] operations it predicts it will make [using its statistics]) and also the number of SCANS. A SCAN is where SQL has no direct index to use, so it scans the whole index (or the whole table) to try to find records that match the criteria. If you join a PARENT and a CHILD table and you got, say, 100 scans that would be a disastrous situation - SQL was rescanning the child table for every single row in the MASTER table. An index on the child table (on the relevant columns in the JOIN) would reduce the scan count to ONE!)So my recommendation is to get to the point where you can "spy" on SQL going to the server and then experiment with individual SQL statements to look at the Scan Count and Logical Reads and see what makes them better (adding an index for example, or parametrising the query - or asking for suggestions on SQL Team  ) ) |

|

|

|

|

|

|

|

|